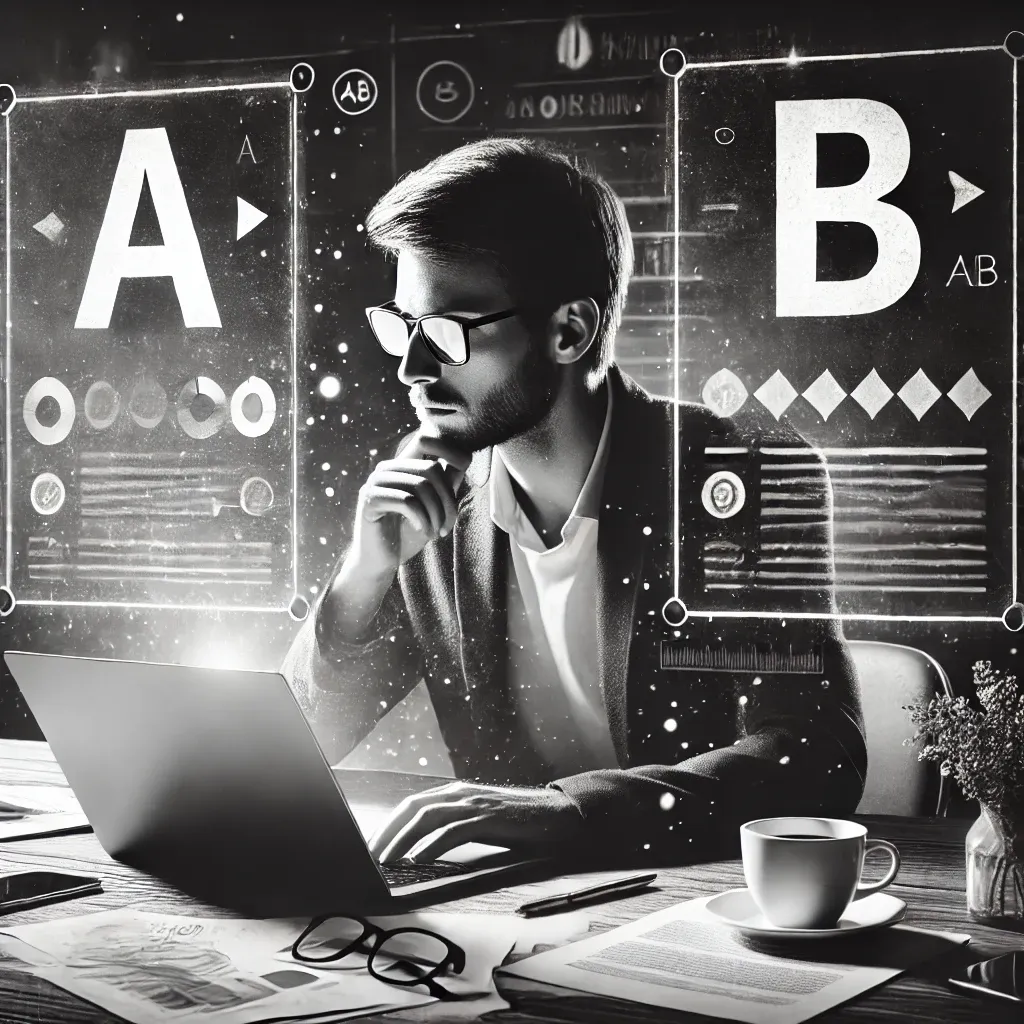

Is A/B Testing Worth It for AI Prompts? (10 Expert Opinions)

Writing prompts for AI tools like ChatGPT isn’t just about typing a question—it’s about crafting something that gets you the best possible response. But how do you know if your prompt is as good as it could be?

That’s where A/B testing comes in.

A/B testing allows you to test two versions of a prompt to see which one performs better.

Whether you’re looking for more accurate answers, engaging outputs, or even creative ideas, this method can help you fine-tune your prompts.

In this article, we’ll explore what A/B testing is, why it matters, and share expert opinions on whether it’s the secret to getting better results from AI.


What Is A/B Testing for AI Prompts?

A/B testing is a way to compare two versions of something—in this case, AI prompts—to see which one performs better.

It’s like running an experiment: you change one detail, keep everything else the same, and check which version delivers better results.

For example, let’s say you’re using an AI tool for customer support.

You could test these two prompts:

1. “How can I assist you with your issue today?”

2. “What specific problem are you facing that I can help resolve?”

The goal is to figure out which prompt gets more accurate or helpful responses from the AI.

By running A/B tests, you can fine-tune your prompts for clarity, tone, and relevance, and confirm with data that they work as intended.
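When prompts are tested on live users, each user is typically assigned to one variant at random and should keep seeing that same variant. Below is a minimal Python sketch of one common way to do this by hashing a stable user ID. The experiment name, the `PROMPTS` dictionary, and `assign_variant` are illustrative assumptions, not part of any specific tool:

```python
import hashlib

# Illustrative prompt variants (taken from the customer-support example above).
PROMPTS = {
    "A": "How can I assist you with your issue today?",
    "B": "What specific problem are you facing that I can help resolve?",
}

BUCKETS = 10_000  # resolution of the traffic split


def assign_variant(user_id: str, experiment: str = "support-greeting", share_a: float = 0.5) -> str:
    """Deterministically assign a user to variant "A" or "B".

    Hashing the experiment name together with the user ID means the same
    user always gets the same prompt within an experiment, while separate
    experiments split users independently of each other.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % BUCKETS  # 0 .. BUCKETS - 1
    return "A" if bucket < share_a * BUCKETS else "B"


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        variant = assign_variant(uid)
        print(uid, variant, PROMPTS[variant])
```

Hashing, rather than picking a random variant on every request, keeps each user's assignment stable across sessions without storing it anywhere.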

Benefits of A/B Testing AI Prompts

A/B testing isn’t just about trying different ideas—it’s about improving the quality of AI responses.

Here’s why it’s worth considering:

1. Better Output Quality:

Testing helps refine your prompts, so you get more accurate and relevant results from AI.

Example: Changing “Explain this concept” to “Explain this concept in simple terms suitable for a 10-year-old.”

2. Improved Engagement:

For tasks like marketing or customer service, the right prompt can increase user interaction.

Example: Testing two prompts that generate email subject lines to see whose subject lines get more clicks.

3. Revealing Hidden Biases:

Comparing outputs from two phrasings can reveal biases a prompt introduces, helping you create fairer and more inclusive outputs.

Example: Comparing a gender-neutral prompt for job descriptions against a gendered one.

4. Time Efficiency:

Once you’ve optimized your prompts, you spend less time refining outputs manually.

Example: Testing two prompts upfront can save hours of editing responses later.

5. Measurable Results:

A/B testing gives you clear data to show which prompts perform better, making your decisions more informed.
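To turn clear data into a decision, you also need to know whether the gap between two prompts is bigger than chance alone would produce. Below is a minimal Python sketch of a standard pooled two-proportion z-test for a yes/no outcome such as "query resolved" or "email clicked". The function name and the counts in the usage line are made up for illustration:

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int):
    """Pooled two-proportion z-test comparing prompt A with prompt B.

    Returns (rate_a, rate_b, z, p_value), where p_value is two-sided.
    Uses the normal approximation, which is reasonable when each variant
    has at least a few dozen successes and a few dozen failures.
    """
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Every trial succeeded (or every trial failed): no evidence of a difference.
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical counts: prompt A resolved 420 of 1,000 chats, prompt B 470 of 1,000.
print(two_proportion_z_test(420, 1000, 470, 1000))
```

With these invented numbers, z comes out around 2.25 and the p-value around 0.02, so the gap would usually be treated as unlikely to be chance alone at the common 0.05 threshold.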

Challenges of A/B Testing for AI Prompts

A/B testing sounds simple, but it can come with its set of challenges.

Here’s what you should be prepared for:

1. Takes Time and Resources

Running effective A/B tests means creating multiple variations and tracking results, which can be time-consuming.

Example: Testing prompts for a marketing campaign might require weeks of data collection to determine the best version.

2. Difficulty in Measuring Success

Unlike straightforward metrics like click rates, measuring success for AI outputs can be tricky, especially for creative or nuanced tasks.

Solution: Define clear goals, such as accuracy, engagement, or user satisfaction, before testing.

3. Overfitting Prompts

There’s a risk of optimizing prompts too much for one specific scenario, making them less effective in other contexts.

Solution: Test prompts across different use cases to ensure versatility.

4. Cost of Tools and Platforms

Some A/B testing tools, especially those for advanced AI applications, can be expensive.

Example: Using premium platforms to run tests might not be practical for smaller projects.

5. Human Interpretation Needed

Even with clear results, understanding why one prompt works better than another requires critical thinking and analysis.
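The weeks of data collection mentioned in the first challenge mostly come down to sample size: small differences between prompts need many users before they can be told apart from noise. Below is a rough planning sketch in Python using the common normal-approximation formula for comparing two proportions (two-sided test, 5% significance, 80% power by default). The function name and example rates are assumptions for illustration:

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_a: float, p_b: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH variant to detect a change in a
    success rate from p_a to p_b with a two-sided test at level alpha.

    n = (z_{1-alpha/2} + z_{power})^2 * (p_a(1-p_a) + p_b(1-p_b)) / (p_a - p_b)^2
    """
    if p_a == p_b:
        raise ValueError("p_a and p_b must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p_a * (1 - p_a) + p_b * (1 - p_b)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p_a - p_b) ** 2)


# Hypothetical goal: detect a lift in click rate from 10% to 12%.
print(sample_size_per_variant(0.10, 0.12))
```

For that hypothetical 10% to 12% lift, the estimate is roughly 3,800 users per variant, which is why low-traffic tests can take weeks. Treat the result as a ballpark figure for planning, not an exact requirement.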

Despite these challenges, understanding and planning ahead can make A/B testing a valuable part of your AI optimization process.
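For the overfitting risk in particular, one simple safeguard is to break results down by use case before declaring a winner. That way, a prompt that wins on average but loses badly in one context does not slip through. Below is a minimal Python sketch. The `(use_case, variant, success)` record format and both function names are assumptions for illustration:

```python
from collections import defaultdict


def success_rates_by_use_case(records):
    """records: iterable of (use_case, variant, success) tuples.

    Returns {use_case: {variant: success_rate}}.
    """
    tallies = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [successes, trials]
    for use_case, variant, success in records:
        tally = tallies[use_case][variant]
        tally[0] += int(bool(success))
        tally[1] += 1
    return {
        use_case: {variant: s / n for variant, (s, n) in variants.items()}
        for use_case, variants in tallies.items()
    }


def use_cases_where_worse(rates, candidate="B", baseline="A"):
    """Use cases in which the candidate prompt underperforms the baseline."""
    return sorted(
        use_case
        for use_case, by_variant in rates.items()
        if candidate in by_variant
        and baseline in by_variant
        and by_variant[candidate] < by_variant[baseline]
    )


records = [
    ("billing", "A", True), ("billing", "A", False),
    ("billing", "B", True), ("billing", "B", True),
    ("shipping", "A", True), ("shipping", "B", False),
]
print(use_cases_where_worse(success_rates_by_use_case(records)))  # ['shipping']
```

With real traffic, each use case needs enough data on its own, because a gap in a tiny segment may just be noise.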

When A/B Testing Is Most Useful for AI Prompts

A/B testing isn’t always necessary, but in some scenarios it’s especially valuable. Here’s when it makes the most sense to use it:

1. Customer Support

Fine-tune prompts for AI chatbots to improve response accuracy and customer satisfaction.

Example: Test “How can I help you today?” against “What issue are you facing?” to see which prompt resolves more queries.

2. Marketing Campaigns

Optimize AI-generated ad copy, email subject lines, or social media posts for better engagement.

Example: Compare two prompts for creating an ad headline to determine which one drives more clicks.

3. Educational Content

Create more effective learning materials by testing different explanations or examples.

Example: Test “Explain gravity to a beginner” versus “Explain gravity using an everyday example.”

4. Creative Writing

Experiment with prompts to generate stories, poems, or scripts that align with a specific tone or theme.

Example: Test prompts like “Write a suspenseful thriller” and “Write a story with a surprising twist.”

5. E-commerce Recommendations

Test prompts to improve AI recommendations for personalized shopping experiences.

Example: Compare “Show me popular products” with “Suggest trending items for someone interested in tech gadgets.”

By identifying when and where A/B testing adds value, you can focus your efforts and maximize results.
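For creative writing or educational content, there may be no click rate to count. One common alternative works as follows. Reviewers see the two outputs side by side without knowing which prompt produced which, and you record which one they prefer. Then you drop ties and apply an exact sign test. Below is a minimal Python sketch. The function name and the 9-to-1 split in the usage line are hypothetical:

```python
import math


def sign_test_p_value(wins_a: int, wins_b: int) -> float:
    """Two-sided exact sign test for blind pairwise preferences.

    wins_a / wins_b: how many reviewers preferred prompt A's / prompt B's
    output (ties removed). Returns the probability of a split at least this
    lopsided if neither prompt were actually better (each win a fair coin flip).
    """
    n = wins_a + wins_b
    k = min(wins_a, wins_b)
    # P(X <= k) for X ~ Binomial(n, 0.5), doubled for a two-sided test.
    lower_tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * lower_tail)


# Hypothetical: 9 reviewers preferred prompt B's story, 1 preferred prompt A's.
print(sign_test_p_value(wins_a=1, wins_b=9))
```

A 9-to-1 split gives p ≈ 0.021. A 6-to-4 split (p ≈ 0.75) would be entirely consistent with chance.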

10 Expert Opinions on A/B Testing for AI Prompts

To understand the value of A/B testing for AI prompts, let's explore insights from industry experts and publications:

1. Craig Sullivan, Founder of Optimize or Die

Craig Sullivan emphasizes that AI can enhance every stage of the experimentation process, from research to test automation.

He notes that integrating AI within A/B testing can help overcome challenges such as orchestrating multiple teams across different countries, thereby increasing test velocity.

2. Ali Abassi, AI for Work

Ali Abassi discusses the use of AI tools like ChatGPT to create A/B testing plans, highlighting that AI can assist in crafting detailed and effective testing strategies.

He provides step-by-step guidance on using AI for A/B testing, emphasizing the importance of clear objectives and success metrics.

3. HubSpot Marketing Blog

The HubSpot team explores how AI can revolutionize A/B testing by providing real-time reports and enabling the testing of multiple hypotheses simultaneously.

They suggest that AI-driven A/B testing makes experimentation smarter and more manageable, offering unique insights into consumer behavior.

4. Kameleoon Blog

Kameleoon's article provides 50 AI prompts specifically designed for A/B testing teams, aiming to enhance product features, user engagement, and marketing channels.

They emphasize the importance of integrating AI into the A/B testing process to improve efficiency and outcomes.

5. Convert.com Blog

Convert.com discusses the incorporation of AI technology into A/B testing tools, noting that AI-driven A/B testing isn't new but continues to evolve. They highlight that AI-powered tools can improve the efficiency of experimentation, making it accessible to businesses without extensive optimization programs.

6. Story22 Blog

Story22 outlines 10 AI prompts that expert marketers should use and recommends A/B testing to determine what resonates best with target audiences.

They provide advanced tips on emotional appeal and engagement to enhance response rates.

7. Neptune.ai Blog

Neptune.ai discusses strategies for effective prompt engineering, including techniques like Chain-of-Thought prompting and dynamic prompting. They emphasize that mastering these techniques can lead to more accurate and relevant AI-generated content, which is crucial for successful A/B testing.

8. Open Praxis Journal

An article in Open Praxis explores the "Act As An Expert" technique in AI prompts, aiming to personalize interactions with language models.

This approach can be beneficial in A/B testing by tailoring AI responses to specific roles or specialties.

9. Vogue Business

Vogue Business examines AI hallucinations and their impact on brand trust.

They suggest that A/B testing can help identify and mitigate such errors, ensuring more reliable AI-generated content.

10. The Wall Street Journal

The Wall Street Journal discusses the importance of politeness in AI interactions, noting that A/B testing different prompt phrasings can lead to more effective and user-friendly AI communications.

These expert insights highlight the multifaceted benefits of A/B testing for AI prompts, from enhancing user engagement to supporting more ethical AI practices.

Tools for A/B Testing AI Prompts

To make A/B testing efficient and reliable, the right tools are essential.

These platforms simplify the process, track performance, and provide actionable insights.

Here are some of the top tools for A/B testing AI prompts:

1. OpenAI Playground

A powerful tool to experiment with different prompts and parameters in real-time.

Best For: Testing variations of ChatGPT prompts to optimize accuracy and relevance.

Example: Compare outputs for “Explain renewable energy” vs. “Explain renewable energy in simple terms.”

2. PromptPerfect

Created specifically for prompt optimization, it analyzes prompt variations and refines them for better results.

Best For: Creating precise prompts for both ChatGPT and Gemini.

3. Google Optimize

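Integrates with Google Analytics to test content and measure user engagement. Note, however, that Google discontinued Google Optimize in September 2023, so new experiments will need a different platform.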

Best For: Testing AI-generated marketing copy and web content.

4. Kameleoon

Offers advanced AI-powered A/B testing capabilities for digital experiences.

Best For: Experimenting with customer-facing prompts on websites or apps.

5. UserTesting AI

Allows businesses to gather feedback on AI-generated content from real users.

Best For: Testing prompts for customer support or educational tools.

6. Optimizely

A robust platform that supports multivariate testing and AI content optimization.

Best For: Large-scale A/B testing projects requiring detailed performance insights.

Using these tools makes the A/B testing process more streamlined, helping you refine prompts and achieve consistent results.

Steps to Conduct A/B Testing for AI Prompts

A/B testing for AI prompts might seem complex, but breaking it down into manageable steps can make the process straightforward and effective. Here’s how to get started:

1. Define Your Objective

Determine what you want to achieve with your prompts.

Example: Are you aiming for clearer responses, higher engagement, or faster task completion?

2. Create Two Variations

Write two versions of your prompt that differ in only one element, so any difference in results can be traced to that change.

Example:

Prompt A: “Explain renewable energy.”

Prompt B: “Explain renewable energy in simple terms with examples.”

3. Test in a Controlled Environment

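Use a tool such as OpenAI Playground to run both prompts in identical scenarios, or an experimentation platform such as Optimizely when testing with live users.

Keep everything except the prompt consistent: the model and its settings (such as temperature), the audience type, and the context.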

4. Measure Key Metrics

Track performance indicators such as response clarity, accuracy, or user engagement.

Example Metrics: Time taken to respond, user satisfaction, or task completion rates.

5. Analyze Results

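Compare the performance of the two prompts and identify which one better meets your objective. Check that the difference is larger than random variation alone would produce, especially with small samples.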

6. Refine and Retest

Based on the results, tweak your prompts and conduct additional tests if needed.
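Steps 1 to 5 above can be sketched as a small offline harness in Python that runs both prompt variants on the same inputs and scores them with one agreed metric. Here `generate` stands in for whatever calls your model, such as a chat API client. In this sketch it is a hypothetical fake that echoes the prompt, so the code runs without an API key. The keyword-based `score` is a deliberately simple placeholder metric, and the class and function names are illustrative:

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass
class PromptExperiment:
    objective: str                 # Step 1: what "better" means
    prompt_a: str                  # Step 2: two templates that differ
    prompt_b: str                  #         in a single element
    inputs: list[str]              # Step 3: identical inputs for both variants
    score: Callable[[str], float]  # Step 4: metric applied to each output


def run_experiment(exp: PromptExperiment, generate: Callable[[str], str]) -> dict[str, float]:
    """Run both prompt templates on the same inputs and return the mean score
    per variant (Step 5 compares these). Keep the model and its settings fixed
    inside `generate` so the prompt is the only thing that changes."""
    results = {}
    for name, template in (("A", exp.prompt_a), ("B", exp.prompt_b)):
        outputs = [generate(template.format(topic=topic)) for topic in exp.inputs]
        results[name] = mean(exp.score(output) for output in outputs)
    return results


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: echoes the prompt."""
    return prompt


experiment = PromptExperiment(
    objective="answers include concrete examples",
    prompt_a="Explain {topic}.",
    prompt_b="Explain {topic} in simple terms with examples.",
    inputs=["renewable energy", "solar power", "wind turbines"],
    score=lambda text: 1.0 if "example" in text.lower() else 0.0,
)
print(run_experiment(experiment, fake_generate))  # {'A': 0.0, 'B': 1.0}
```

With a real model, you would swap in your own `generate` and a metric tied to the Step 1 objective, such as human ratings, task completion, or accuracy against reference answers. The per-variant results then go into a significance check before you refine and retest.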

Example Scenario:

If you're optimizing prompts for an AI-powered chatbot:

Prompt A might result in faster response times but lower user satisfaction.

Prompt B could take longer but provide more detailed answers.

Your analysis would help decide which prompt aligns with your goals.
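One way to make the decision in this scenario explicit is to choose a primary metric (here, user satisfaction) and treat the other metric (response time) as a guardrail with a limit set in advance. Below is a minimal Python sketch. The metric names, the 5-second limit, and the numbers are invented for illustration:

```python
def pick_winner(metrics, primary="satisfaction", guardrail="response_time_s", limit=5.0):
    """Return the variant with the highest primary metric among variants whose
    guardrail metric is within the limit, or None if no variant qualifies.

    metrics: {variant: {metric_name: value}}; a higher primary value is better,
    and a lower guardrail value is better.
    """
    eligible = {name: m for name, m in metrics.items() if m[guardrail] <= limit}
    if not eligible:
        return None
    return max(eligible, key=lambda name: eligible[name][primary])


# Invented averages matching the scenario: A is faster, B is more satisfying.
observed = {
    "A": {"satisfaction": 3.9, "response_time_s": 1.2},
    "B": {"satisfaction": 4.4, "response_time_s": 3.5},
}
print(pick_winner(observed))             # B: better satisfaction, still under 5 s
print(pick_winner(observed, limit=2.0))  # A: B breaks the stricter time limit
```

Agree on the primary metric and the limit before you look at the results. Otherwise the choice can drift toward whichever number looks best afterwards.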

A systematic approach ensures you get the most value out of A/B testing and continuously improve your AI prompts.

Conclusion: Is A/B Testing Worth It for AI Prompts?

A/B testing is a powerful tool for optimizing AI prompts, whether you’re improving customer service chatbots, generating marketing content, or refining educational tools.

It helps you identify what works best, leading to better accuracy, engagement, and user satisfaction.

However, A/B testing isn’t the only option. Approaches like user feedback loops and role-based prompting can be equally effective in certain scenarios.

By combining A/B testing with these strategies, you can unlock the full potential of AI tools like ChatGPT and others.

Key Takeaways

1. A/B testing helps improve the effectiveness of AI prompts by identifying better-performing variations.

2. It provides measurable benefits like increased accuracy, engagement, and fairness in responses.

3. Common challenges include time investment, defining metrics, and overfitting prompts.

4. Tools like OpenAI Playground, PromptPerfect, and Optimizely simplify the A/B testing process.

5. A/B testing is most impactful for marketing, customer service, and creative projects.