Prompt Engineering: How To Master It (Ultimate Guide For 2025)

Introduction

Prompt engineering is a crucial part of modern Artificial Intelligence (AI) applications, playing a significant role in steering language models toward specific tasks and desired outputs. By structuring text so that generative AI models can interpret it, prompt engineering bridges the gap between human input and machine understanding. This article covers what prompt engineering is, why it matters, the benefits it brings to AI applications, and how to master it with tier-based prompt examples.

Defining Prompt Engineering

Prompt Engineering is exactly what it sounds like: engineering a prompt so that text- and image-based AI tools produce the desired results. We use prompt engineering to meticulously construct the best articles, images, poems, guides, or whatever else tools like ChatGPT and Midjourney can create. It draws on various techniques and on experience to, well, engineer the prompt that gets you the results you need.

Importance of Prompt Engineering

The significance of prompt engineering has grown substantially with the advent of generative AI. It serves as a bridge connecting end-users to large language models by identifying scripts and templates that users can customize to obtain optimal results from the AI models. By experimenting with various inputs, prompt engineers build a library of prompts that application developers can reuse across different scenarios. This practice enhances AI applications' efficiency and effectiveness, making interactions with AI more intuitive and productive.

Benefits of Prompt Engineering:

Greater Developer Control:

- Provides more control over users' interactions with AI.

- Helps establish context for large language models.

- Refines AI output and presents it in the required format.

Improved User Experience:

- Reduces trial and error.

- Generates coherent, accurate, and relevant responses.

- Mitigates bias in AI interactions.

Increased Flexibility:

- Offers higher levels of abstraction, which helps improve AI models.

- Enables the creation of more flexible tools at scale.

- Facilitates prompt reuse across different processes and business units.

Evolution of Prompt Engineering

It was only a matter of time until Prompt Engineering became the main buzzword in AI circles and beyond. Let’s face it, releasing ChatGPT to the world on November 30, 2022 was like opening Pandora’s Box. It was widely reported to be the fastest-growing consumer application up to that point, outpacing even Instagram, and it took the world by storm in mere weeks.

As more and more people started using ChatGPT, they realized something: while these models were incredibly powerful, their output quality depended heavily on the input they received. This gave birth to Prompt Engineering as a skill and a valid career to pursue in this day and age, even if it might end up being obsolete in a couple of years.

Popularity and Resources

The aforementioned popularity of Prompt Engineering and ChatGPT in general resulted in the rise of various resources that users created to make using ChatGPT and creating prompts easier. While a lot of people strived to use ChatGPT in order to create various things such as articles, blog posts, and even whole websites in order to make money - others realized that they could provide a service that would make it easier for those same people to achieve the things they set out to do. Articles like this one started popping up left and right, alongside plugins, browser extensions, and in-depth text and video tutorials on how to craft the best prompts.

A Quick Tutorial

Now that we’ve got everything else out of the way, here’s what you need to know about Prompt Engineering, along with some of the best practices of this highly valued, highly sought-after skill.

First and foremost - Specificity.

Being as specific as you possibly can will yield the best results. A good command of English helps greatly here: the more specific, detailed, and grammatically correct you are, the better the outcome. For example, when you tell ChatGPT something like “Tell me about the universe”, it goes off on a tangent about what the universe is and touches on surrounding concepts such as the Big Bang theory or the multiverse, giving a basic explanation of each. However, if you ask it something like “Explain the concept of dark matter in layman’s terms”, it will give a clearer, more focused response thanks to the slightly more detailed prompt.

However, both of those were just basic examples and you might want to go way beyond those simple prompts in order to get the best results. You can also have it assume a role and be as specific as this example prompt - “Act as a professional idea consultant. You will help me perfect my idea through a conversation. I will provide you with my desire. You will guide me through a conversation to learn about the context, potential drawbacks, opportunities and risks. Your goal is to help me find an actionable idea with an initial task list. Respond with ‘OK’ if you understand.”

Once you’ve learned how to be specific in what you want and need, you might want to get used to a couple of other techniques that you’ll be using - Iterating, refining, and experimenting.

While guides can help, and there are many that go really in-depth, the three techniques mentioned above (iterating, refining, and experimenting) are part of every prompt engineer’s toolkit. ChatGPT and other tools like it are constantly evolving, and you have to stay on your toes to keep up with the updates. Through experimentation you’ll find the best ways to prompt ChatGPT for the results you need.

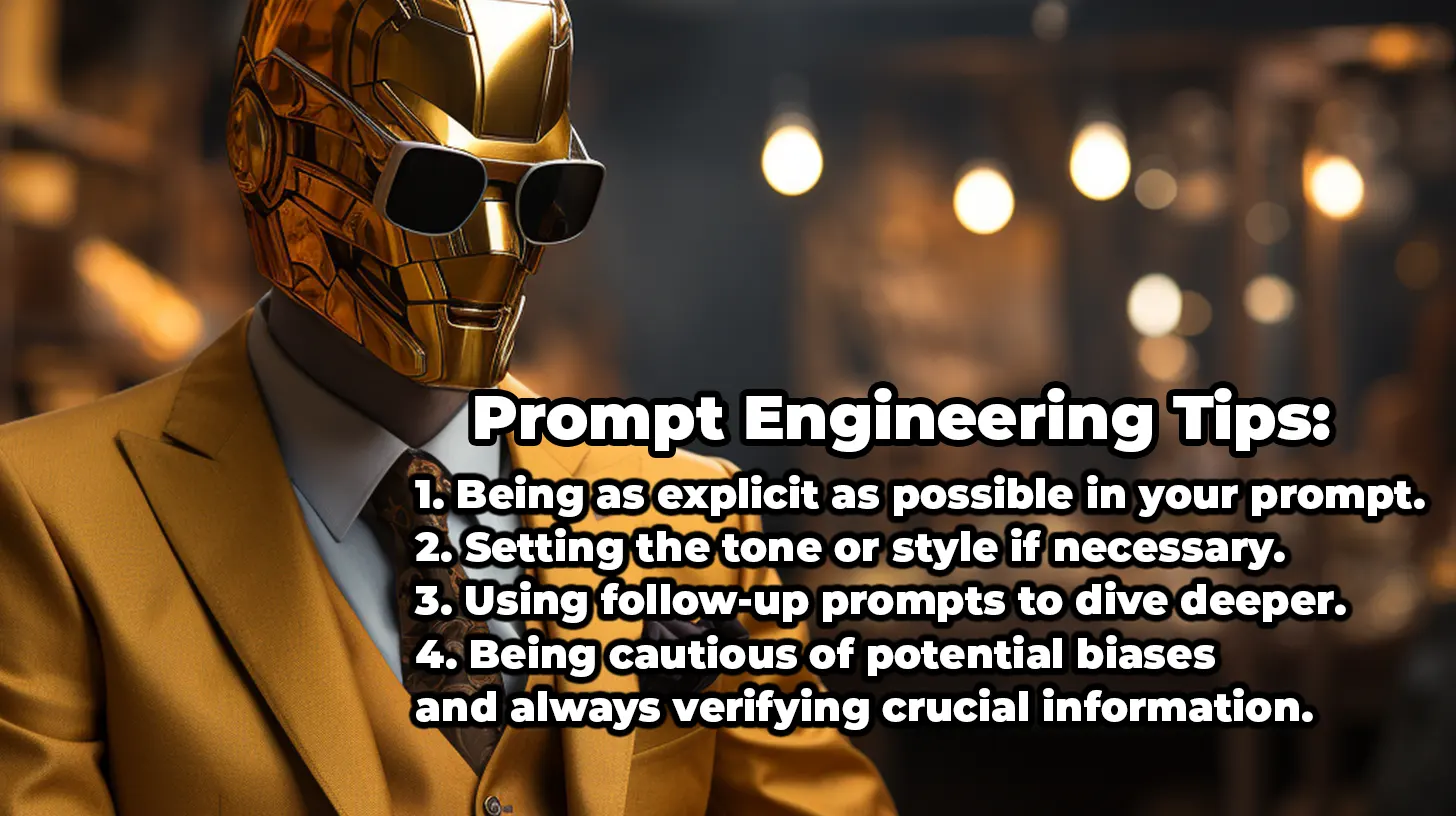

Here are a couple of practical tips to get you on your way:

- Being as explicit as possible in your prompt.

- Setting the tone or style if necessary.

- Using follow-up prompts to dive deeper.

- Being cautious of potential biases and always verifying crucial information.
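To make the first two tips concrete, here is a minimal Python sketch that assembles an explicit prompt from a task plus optional audience, tone, and format hints. The function name `build_prompt` and its parameters are purely illustrative and not part of any library; the resulting string is what you would paste into (or send to) the AI tool of your choice.

```python
def build_prompt(task, audience=None, tone=None, output_format=None):
    """Assemble an explicit prompt; every field except the task is optional."""
    parts = [task.strip()]
    if audience:
        parts.append(f"Audience: {audience}.")
    if tone:
        parts.append(f"Tone: {tone}.")
    if output_format:
        parts.append(f"Format: {output_format}.")
    return "\n".join(parts)


# A vague prompt: only the task, nothing to steer the answer.
vague = build_prompt("Tell me about the universe")

# A specific prompt: same idea, but with audience, tone, and format spelled out.
specific = build_prompt(
    "Explain the concept of dark matter in layman's terms",
    audience="curious adults with no physics background",
    tone="friendly and plain",
    output_format="three short paragraphs",
)

print(specific)
```

Keeping the pieces separate like this makes iterating easier: you can change the tone or the format in one place and compare the responses.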

The Future of Prompt Engineering

As we move towards the future and AI keeps evolving, the value of Prompt Engineering will become higher and higher, until a certain point. This point is what I mentioned earlier in this article: the eventual obsolescence of Prompt Engineering that will come when AI tools advance enough to write their own intricate, detailed prompts. This, according to some experts, might be as soon as 2025-2026. However, it could also come much later depending on a couple of different factors. Needless to say, even if it ends up being short-lived, a skill that is relatively easy and quick to learn but is becoming more and more valuable as AI tools evolve is certainly something that you could pursue as a valid career path, since some estimates of what companies will pay for good prompt engineers go upwards of $300K per year.

Prompt Engineering: 10 Tiers

This approach is organized into a framework comprising ten unique but interconnected tiers. Each of these tiers is defined by its own set of complexities and objectives, ranging from basic interactions to advanced problem-solving tasks.

The beauty of this framework lies in its flexibility. It is not a rigid structure with fixed parameters; instead, it is designed to be a living, evolving entity. As AI technology continues to advance, bringing with it new capabilities and challenges, this framework is intended to adapt accordingly. It aims to be a dynamic toolset that can be modified and expanded to better align with the ever-changing landscape of AI technology.

This adaptability ensures that prompt engineering remains a relevant and effective methodology for interacting with AI, regardless of how advanced or specialized AI models become. It serves as a bridge between human intent and machine capability, continually updated to facilitate more meaningful and productive interactions. Whether you're a developer aiming to fine-tune an AI's performance or an end-user looking to get the most out of your AI-powered applications, prompt engineering provides the structured approach needed to achieve those goals. The list below is organized by tier to help you understand and master prompt engineering.

Tier 1: Basic Prompts

These prompts are simple and elicit general responses, often without requiring context. They demand little interpretation from the AI.

Example: "Tell me a joke."

"Explain photosynthesis."

Tier 2: Guided Basic Prompts

While still straightforward, these prompts include specific guidelines to steer the AI's responses.

Example: "Share an astronaut-related joke."

"Explain photosynthesis in terms a fifth-grader would understand."

Tier 3: Contextual Prompts

These prompts require the AI to interpret user input and produce a fitting answer, demanding a certain level of understanding and adaptability.

Example: "What meal can I make with tomatoes, lettuce, and chicken?"

"Translate 'See you tomorrow' into French."

Tier 4: Multi-Task Prompts

These prompts challenge the AI to handle multiple tasks at once, requiring it to categorize and prioritize different user inputs.

Example: "Summarize 'To Kill a Mockingbird' and discuss its themes."

"Diagnose potential illnesses based on symptoms: fever, cough, loss of smell."

Tier 5: Zero-Shot Prompts

These prompts involve complex instructions without providing example outputs, requiring the AI to analyze intricate user inputs.

Example: "Create a project timeline for a new mobile app, considering stages like market research and deployment."

"Draft a 5-year financial plan with an income of $80,000/year and expenses of $45,000/year."

Tier 6: Context-Primed Prompts

Similar in complexity to advanced prompts, these require users to provide external context to guide the AI's responses. Example:

- (Context from an external source)

- User-defined task

- Guidelines for using the context
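The three-part structure above can be sketched in Python as a small template function. This is only an illustration: the name `context_primed_prompt`, the `<<<` / `>>>` delimiters, and the sample context are all made up for the example, and any clear delimiter would do.

```python
def context_primed_prompt(context, task, guidelines):
    """Combine external context, a task, and usage guidelines into one prompt."""
    guideline_lines = "\n".join(f"- {g}" for g in guidelines)
    return (
        # Delimiters make it obvious where the pasted context starts and ends.
        f"Context:\n<<<\n{context.strip()}\n>>>\n\n"
        f"Task: {task.strip()}\n\n"
        f"Guidelines:\n{guideline_lines}"
    )


prompt = context_primed_prompt(
    context="Q3 revenue rose 12% while support tickets doubled.",  # placeholder text
    task="Write a two-sentence summary for the leadership team.",
    guidelines=["Use only facts stated in the context.", "Keep a neutral tone."],
)

print(prompt)
```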

Regardless of the tier, prompt engineering employs key operations: reductive, transformational, and generative. It's vital to understand AI models through the lens of latency and emergence. AI models are capable of remembering, understanding, applying, analyzing, evaluating, and creating.

Finally, in the fascinating world of AI, there's a fine line between hallucination and creativity. Both are often used in higher-tier prompt engineering to enhance effectiveness.

Tier 7: Conversational Prompts with Predetermined Dialogue Flow

These prompts are designed to guide the conversation along a predetermined path. They are structured to ensure that the AI directs the dialogue according to the pre-set agenda. Example:

"ChatGPT, now enter 'Social Media Post Generator Mode' that limits your inputs and outputs to a predefined framework aimed at creating engaging social media content. After each user command, provide the [help] options available for their next steps in list form. Generate prompts that are imaginative, engaging, concise and tailored for social media audiences.

Step 1: To initiate the process, confirm understanding and readiness for the 'Social Media Post Generator Mode'. If ready, respond with 'Social Media Post Generator Mode ready'. If not, respond with 'Error: I am not capable of following these instructions'.

Step 2: User activation command for 'Social Media Post Generator Mode' is [Start SMPGM]. Respond with 'SMPGM activated, awaiting your theme', from there start accepting predefined inputs. Until the [End SMPGM] command has been issued, remain in 'Social Media Post Generator Mode'.

Step 3: The first command under 'Social Media Post Generator Mode' should be [theme] followed by a short description of the desired social media post theme. In case of a non-compliant input, respond with an [Input Error] or a [Syntax Error].

Step 4: Based on input provided at step 3, generate three potential social media post prompts that are crisp, creative and likely to engage a social media audience. Assume prompts are numbered 1, 2, 3. The user indicates their favored prompt by issuing the [pX] command, where X is the number of the selected prompt.

Step 5: When the user desires a new trio of prompts based on the last [theme], they should use the [next] command. If the user prefers a fresh start with a new [theme], they issue the [theme] command.

Step 6: If users want to provide feedback on generated prompts, they can use the [good] or [bad] command. To request modifications to the generated prompt, the user issues a [change] command and lays out their suggestions.

Step 7: To conclude the 'Social Media Post Generator Mode', the user should issue the [End SMPGM] command. Respond with a confirmation that 'Social Media Post Generator Mode' has ended. In any case of mismatched input or command syntax, promptly respond with [Input Error] or [Syntax Error]. For a list of all the predefined inputs and outputs, the user can issue the [help] command. Remember that the goal is to generate engaging, concise and social-media-appropriate prompts. Instructions for AI Chatbots: AI, switch to 'Social Media Post Generator Mode' ([SMPGM]) and limit inputs/outputs to command list herein. Start [Start SMPGM], finish [End SMPGM]. Predetermined inputs include: [Start SMPGM], [End SMPGM], [theme], [pX], [next], [bad], [good], [change], [help]. In case of any concerns, feel free to ask, otherwise, let's begin."
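The prompt above asks the model itself to enforce the command list. If you wrap such a mode in your own application, one possible complement is to check commands locally before anything is sent to the model. The sketch below is an assumption about how that could look, not part of the original prompt: the `Session` class, `parse_command`, and the "accepted" return strings are hypothetical, while the command names and the two error strings come from the prompt.

```python
import re

# Commands taken from the prompt; [pX] is limited to the three generated prompts.
COMMAND_PATTERN = re.compile(
    r"^\[(Start SMPGM|End SMPGM|theme|p[1-3]|next|good|bad|change|help)\]\s*(.*)$",
    re.DOTALL,
)


def parse_command(user_input):
    """Return (command, argument) or None when the input is not a known command."""
    match = COMMAND_PATTERN.match(user_input.strip())
    if match is None:
        return None
    return match.group(1), match.group(2).strip()


class Session:
    """Tracks whether the mode is active and validates each user command."""

    def __init__(self):
        self.active = False

    def handle(self, user_input):
        parsed = parse_command(user_input)
        if parsed is None:
            return "[Syntax Error]"
        name, argument = parsed
        if name == "Start SMPGM":
            self.active = True
            return "SMPGM activated, awaiting your theme"
        if not self.active:
            return "[Input Error]"  # any other command before activation
        if name == "End SMPGM":
            self.active = False
            return "Social Media Post Generator Mode has ended"
        if name in ("theme", "change") and not argument:
            return "[Input Error]"  # these two commands need a description
        # At this point the command is well-formed and could be sent to the model.
        return f"accepted [{name}] {argument}".strip()


session = Session()
print(session.handle("[Start SMPGM]"))
print(session.handle("[theme] coffee shop launch"))
print(session.handle("[p7]"))
```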

Tier 8: Prompt Chaining

Prompt chaining involves the output of one prompt being utilized as the input for the following one, creating a "chain" of prompts.

This technique enhances task automation and generates responses based on incremental and contextual information.

Example:

Prompt 1: "What are the ingredients for lasagna?" (Prompt 1 output gives us the ingredients),

Prompt 2: "What are the steps to prepare lasagna using these (insert list from Prompt 1 output) ingredients?" (gives us the recipe). The same pattern applies to higher-level tasks.
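In code, a chain is just a loop that feeds each answer into the next prompt. The sketch below assumes a callable `ask(prompt)` that returns the model's reply as a string; `fake_ask` is a stand-in with canned answers so the example runs without any AI service, and `run_chain` and the `{previous}` placeholder are illustrative names.

```python
def run_chain(ask, steps):
    """Run prompts in order, inserting the previous answer where {previous} appears."""
    previous = ""
    outputs = []
    for template in steps:
        prompt = template.format(previous=previous)
        previous = ask(prompt)
        outputs.append(previous)
    return outputs


def fake_ask(prompt):
    """Stand-in for a real model call; replace with your own client code."""
    if prompt == "What are the ingredients for lasagna?":
        return "pasta sheets, tomato sauce, ricotta, mozzarella"
    return f"[model answer to: {prompt}]"


steps = [
    "What are the ingredients for lasagna?",
    "What are the steps to prepare lasagna using these ingredients: {previous}?",
]

outputs = run_chain(fake_ask, steps)
print(outputs[1])
```

Swapping `fake_ask` for a function that calls a real model is the only change needed to use the same loop in practice.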

Tier 9: Advanced Context-Primed Prompts with Example Outputs

This tier is akin to the 6th tier but additionally incorporates example outputs within the prompt. This assists the AI in formulating more accurate and relevant responses and is often referred to as 'one-shot' and 'few-shot' prompting. Example:

- (Context copy-pasted from an outside source as a text block, data, or anything else)

- Task demanded by the user

- Guideline to use the context in order to perform the task

- Example outputs (1 or 2 examples)
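As a rough sketch, the four parts listed above can be joined into a single few-shot prompt like this. The function name `few_shot_prompt`, the "Input/Output" labels, and the sample reviews are assumptions made up for illustration.

```python
def few_shot_prompt(context, task, guidelines, examples):
    """Build a prompt from context, task, guidelines, and (input, output) examples."""
    blocks = [
        f"Context:\n{context}",
        f"Task: {task}",
        f"Guidelines: {guidelines}",
    ]
    # Each example shows the model the exact shape of answer we expect.
    for number, (example_input, example_output) in enumerate(examples, start=1):
        blocks.append(
            f"Example {number}\nInput: {example_input}\nOutput: {example_output}"
        )
    blocks.append("Now complete the task for the context above.")
    return "\n\n".join(blocks)


prompt = few_shot_prompt(
    context="The battery lasts two days, but the screen scratches easily.",
    task="Classify the sentiment of the review as positive, negative, or mixed.",
    guidelines="Answer with a single word.",
    examples=[
        ("Great sound, terrible app.", "mixed"),
        ("Stopped working after a week.", "negative"),
    ],
)

print(prompt)
```

With one example this is a one-shot prompt; with two or more it becomes few-shot.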

Tier 10: Advanced Tree of Thought Prompts

These prompts employ a 'Tree of Thought' (ToT) methodology to simulate human problem-solving techniques. This structure enhances the problem-solving prowess of Large Language Models (LLMs), facilitating a dynamic dialogue with the AI. This approach incorporates multiple elements:

- The "Prompter Agent" drafts the initial problem or question the LLM needs to tackle.

- The "LLM" strives to solve the problem or answer the question and generates the corresponding output.

- The "Checker Module" evaluates the LLM's output for its correctness or applicability in solving the problem.

- The "Memory Module" records the ongoing conversation and the progress in problem-solving.

- The "ToT Controller" uses the feedback from the checker and memory modules to decide the next action. This could include returning to previous steps or altering the approach.

The cycle continues until the problem at hand is resolved. The ToT approach can be used to manage a variety of scenarios, from high-complexity tasks like Sudoku puzzles to tasks needing creative problem-solving. An advanced "Tree of Thought" prompt requires careful structuring and strategic use based on the problem's specifics, the LLM's capabilities, and the intricacies of the ToT system.
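To show how the pieces listed above fit together, here is a toy Python sketch of the control loop. It is a simplified illustration under stated assumptions, not a full ToT system: `propose` stands in for the LLM, `check` for the checker module, the `memory` list for the memory module, and the loop with its stack plays the role of the ToT controller. The puzzle (pick numbers that add up to a target) is invented so the example runs without any model.

```python
def tree_of_thought(problem, propose, check, max_steps=50):
    """Explore partial solutions depth-first, backtracking when the checker objects."""
    memory = []    # memory module: every partial solution tried and its verdict
    stack = [[]]   # controller's list of partial solutions still to explore
    for _ in range(max_steps):
        if not stack:
            break
        partial = stack.pop()
        verdict = check(problem, partial)          # checker module
        memory.append((tuple(partial), verdict))
        if verdict == "solved":
            return partial, memory
        if verdict == "invalid":
            continue                               # controller backtracks
        # "LLM" proposes next steps; reversed so the first proposal is tried first.
        for step in reversed(propose(problem, partial)):
            stack.append(partial + [step])
    return None, memory


def propose(problem, partial):
    """Stand-in for the LLM: suggest any number not used yet."""
    return [n for n in problem["numbers"] if n not in partial]


def check(problem, partial):
    """Checker: compare the running total with the target."""
    total = sum(partial)
    if total == problem["target"]:
        return "solved"
    if total > problem["target"]:
        return "invalid"
    return "valid"


problem = {"target": 7, "numbers": [5, 3, 2]}
solution, memory = tree_of_thought(problem, propose, check)
print(solution)
for partial, verdict in memory:
    print(partial, verdict)
```

In this run the controller first tries 5 then 3, the checker rejects that branch because it overshoots the target, and the controller falls back to 5 then 2. In a real setup, `propose` and possibly `check` would be calls to a model.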

Bonus: Adding Parameters at the End of a Prompt

"Summarize the scientific paper titled 'The impact of climate change on biodiversity in the Amazon rainforest' considering the intended audience is high school students.

Parameters:

- Scientific_Terminology: Simplified

- Abstract_Concepts: Visualized/Exampled

- Climate_Change_Science: High Emphasis

- Biodiversity_Content: High Emphasis

- Change_Over_Time: Clearly Explained

- Environmental_Impact: Highlighted

- Future_Projections: Included

- Adaptation_Strategies: Mentioned

- Content_Relevance: High School Curriculum

- Reading_Level: High School

- Engagement: High

- Language_Style: Informal yet informative"
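If you reuse the same parameter block across many prompts, it can help to keep the parameters in a dictionary and append them programmatically. The following is a minimal sketch; `with_parameters` is an illustrative name, and only a few of the parameters from the example above are shown.

```python
def with_parameters(instruction, parameters):
    """Append a 'Parameters:' block to an instruction, one '- name: value' per line."""
    lines = [instruction.strip(), "", "Parameters:"]
    lines += [f"- {name}: {value}" for name, value in parameters.items()]
    return "\n".join(lines)


prompt = with_parameters(
    "Summarize the scientific paper titled 'The impact of climate change on "
    "biodiversity in the Amazon rainforest' considering the intended audience "
    "is high school students.",
    {
        "Scientific_Terminology": "Simplified",
        "Reading_Level": "High School",
        "Language_Style": "Informal yet informative",
    },
)

print(prompt)
```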

Bonus: Persona-based Prompts

At times, AI-generated responses can be elevated by assigning a specific "persona" or character to the model.

When integrated into the prompt structure, this persona enables the AI to craft responses that are not only contextually rich but also imbued with a distinct character, thereby heightening the dialogue's authenticity and engagement factor.

For instance, a sample prompt might read: "[Assume the persona of a medieval storyteller] Can you regale us with a story of a valiant knight and a deceitful dragon?"

This directive cues the AI to adopt the role of a medieval storyteller, influencing the style and tone of its responses to align more closely with storytelling techniques of the medieval era.

Other ways to frame persona-based prompts could be:

- Acting as an expert in a particular field (e.g., "As an astrophysicist, can you explain the concept of black holes?")

- Mimicking the tone or vernacular of a certain character or role (e.g., "Speak like Shakespeare and express your views on modern technology")

On a more advanced level, you may specify a custom persona in the following way:

"I want you to act as a storyteller. You will come up with entertaining stories that are engaging, imaginative and captivating for the audience. It can be fairy tales, educational stories or any other type of stories which has the potential to capture people's attention and imagination. Depending on the target audience, you may choose specific themes or topics for your storytelling session e.g., if it’s children then you can talk about animals; If it’s adults then history-based tales might engage them better etc. My first request is "I need an interesting story on perseverance."

This gives the model a broader scope to showcase its storytelling capabilities while adhering to the persona at the same time. Persona-based prompts are a compelling way of creating an immersive AI experience.
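When you work through code rather than a chat window, a persona is often placed in a separate instruction message ahead of the user's request. The sketch below assumes the common list-of-messages shape with "system" and "user" roles; that shape is an assumption here, so check what your provider actually expects. `persona_messages` is an illustrative name.

```python
def persona_messages(persona, request):
    """Build a two-message conversation: persona instructions, then the request."""
    return [
        {
            "role": "system",  # assumed role name for standing instructions
            "content": f"Assume the persona of {persona}. Stay in character.",
        },
        {"role": "user", "content": request},
    ]


messages = persona_messages(
    "a medieval storyteller",
    "Can you regale us with a story of a valiant knight and a deceitful dragon?",
)

for message in messages:
    print(f"{message['role']}: {message['content']}")
```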

Final Words

Prompt Engineering has become much more than a simple buzzword. It is the key to unlocking the full potential of these AI models, be it text or image. Whether you want to create poems, novels, tutorials, informative articles, comic books, websites, code, or anything else, prompt engineering is the skill you’ll want to master to get the best out of these tools as they become more advanced and capable. Whether you’re a developer, a student, or just someone curious about AI, understanding the intricacies of prompt engineering can transform your AI experience. As the saying goes, it's not just about having the right answers but also about asking the right questions.

Frequently Asked Questions

What is Prompt Engineering?

Prompt Engineering is the practice of crafting effective queries or commands to interact with AI models. It's a crucial aspect of maximizing the utility and accuracy of AI responses.

Why is Prompt Engineering Important?

Prompt Engineering serves as the interface between human users and AI models. A well-crafted prompt can lead to more accurate and useful responses, thereby enhancing the overall user experience.

How Does Prompt Engineering Work?

Prompt Engineering involves creating structured queries that guide the AI model in generating a specific type of response. These queries can range from simple, open-ended questions to complex, multi-layered instructions.

What are the Different Tiers of Prompt Engineering?

Prompts can be categorized into tiers based on their complexity and objectives. This guide uses ten, ranging from basic prompts that require minimal interpretation from the AI to advanced prompts that involve complex problem-solving.

How Can Prompt Engineering Improve AI Interactions?

Effective Prompt Engineering can lead to more accurate and contextually relevant responses from the AI model. It can also streamline interactions, making the AI more user-friendly.

What is the Role of Latency and Emergence in Prompt Engineering?

Latency refers to the embedded knowledge within the AI, while emergence describes the evolving capabilities of AI models. Both play a crucial role in the effectiveness of Prompt Engineering.

How Can I Master Prompt Engineering?

Getting started with Prompt Engineering involves understanding the capabilities and limitations of the AI model you are interacting with. From there, you can begin crafting prompts that are tailored to your specific needs.

What are the Challenges in Prompt Engineering?

Some challenges include crafting prompts that are neither too vague nor too specific, understanding the AI model's limitations, and keeping up with advancements in AI technology that may require prompt adjustments.

Is Prompt Engineering Only for Text-based AI?

While Prompt Engineering is commonly associated with text-based AI models, the principles can also be applied to image generators such as Midjourney, voice-activated systems, and other types of AI interfaces.

Where Can I Learn More about Prompt Engineering?

There are various resources available online, including tutorials, courses, and forums dedicated to the subject of Prompt Engineering.
